The Emergence Of “Safety Layers,” Religion In The Era of AI, Angel Thesis, & Founder Truths

Tech will protect us in exciting new ways. Let’s explore safety layers, religion in the age of AI, and celebrate the “founding teams” that really make ideas happen.

Edition #32 of Implications.

This edition explores forecasts and implications around: (1) the much-needed role of “safety layers” in our everyday lives, (2) how religion may complement the “all-knowing” nature of AI, (3) a critical truth behind “founders” and honoring one that was lost, and (4) some surprises at the end (including my latest angel thesis!), as always.

If you’re new, here’s the rundown on what to expect. This ~monthly analysis is written for founders + investors I work with, colleagues, and a select group of subscribers. I aim for quality, density, and provocation vs. frequency and trendiness. We don’t cover news; we explore the implications of what’s happening. My goal is to ignite discussion, socialize edges that may someday become the center, and help all of us connect dots.

If you missed the big annual analysis or more recent editions of Implications, check out recent analysis and archives here. A few recommendations based on reader engagement:

As LLMs memorize our conversations, preferences, and purchases, the value of sharing selective access to this memory with others will be the equivalent of a mind-meld. Here we discuss the implications of “Me becomes we,” which has generated a lot of discussion since publication.

In April, we forecasted a new chapter in the ongoing battle to be the top interface layer, enabled by new tactics and renewed focus on having the best data underneath. And then last week we saw Slack start to cut off data APIs to startups like Glean. As this heats up, here are potential implications of the coming data wars.

Are we being reprogrammed by algorithms? Most definitely, and in this past edition we discussed the implications of our factory default primal human tendencies being overridden (the good and the bad).

Alright, let’s jump into Edition #32 of Implications:

The development of AI as the ultimate safety layer.

Being targeted in some sort of nefarious digital crime is becoming a daily occurrence. Scam calls and texts, phishing emails, sophisticated hacks disguised as DocuSign requests, colleagues with cloned voices calling for wire transfers, and the list goes on. While people with less experience of tech are especially vulnerable, all of us are facing these tricks and puzzles these days, and the precision and velocity of these crimes will only grow. I have nearly fallen for a few of these attempts myself over the years. There is much focus on how AI will make things worse (fake media, cloned voices, extended context windows to socialize us for a long-game scam, etc.), but how might AI ultimately save us?

Operating system-based AI safety layers to protect us. Whether we receive a scam via call, text, or email, imagine a world where a “Safety Agent,” operating locally (on the device, to ensure privacy), is always scanning the data packets looking for evidence of digital tampering. Such an AI safety layer should have the ability to trigger a new type of OS-level warning or notification that is both audible and visible, with a message along the lines of “This correspondence has a high likelihood of being a scam. Proceed with caution.” While phones are starting to do this based on certain phone numbers, future safety layers will be able to examine the content, the voice, the links, and any degree of socialization that may be fraudulent.

Safety layers can also screen for behaviors with implications for mental health. If you’re doomscrolling past your ordinary bedtime, or you’re exhibiting patterns of depression as you engage in social media, the AI-powered safety layer could give you a similar message that “snaps you out of it,” if only on a temporary basis. Especially for young kids and teenagers, this could be super useful, and it is entirely possible with an OS-level safety layer. I also imagine that AI will be able to diagnose the biases of the algorithms that govern our media consumption and notify us when we’re being polarized.

AI-influenced judgment. We all know that texting or emailing while angry or anxious often leads to our sentiment being misunderstood, causing issues between partners, friends, and colleagues. Perhaps the “safety layer” agents of the future can have settings that detect such moments and improve your judgment in real time on how and when to communicate.

Enterprise “always on” compliance: There is an opportunity for new AI applications to emerge in the enterprise, either as browser extensions or OS-level capabilities, that actually liberate employees to be more proactive and take more risk. These omnipresent compliance agents would calculate cost/benefit in real time and give people confidence to do things that would have otherwise been prohibited or stalled by some compliance process. AI should ultimately encourage agency and risk-taking while keeping a brand safe and eliminating cycles of review and approval.

Can our AI safety layers have some empathy for us? OK, now we’re getting a bit more “out there,” but AI sees more than what we end up saying or sending; it watches us struggle to articulate what we’re trying to say. Right now, as I type and then delete and then type again, my computer sees this internal monologue that you (the reader) never will. Perhaps AI will get to know us and help protect us in ways we cannot yet imagine?

How do we balance the “big brother” nature of safety layers with their benefits? It is somewhat disconcerting to think about an AI, albeit one that runs locally on our devices, seeing everything we do or view. But this barrier is more psychological than anything else. After all, our devices already have all of this information on them. It just can’t yet be accessed and leveraged in these new and powerful ways. I do think the ultimate solution here is a series of AI models that run locally, always updated to detect the latest threats while never transmitting our personal information to the cloud.

What is the role of religion as we navigate a world we increasingly don’t understand?

While the history of humankind is not particularly peaceful, the collective mysteries and miseries of humanity have never been reflected back to us. The notion of “God” in almost all religions is a one-way transom, through which we are welcome to pray and send messages (or sacrifices in more ancient times), but we don’t get clear responses.

During a long run this weekend, I was thinking about the current state of the world.

Nations dropping bombs on each other.

Gangs refining increasingly lethal drugs that people knowingly consume to escape this small precious window of life we get.

Governments racing to build the capacity to extinguish all people, playing an existential game of chicken with human existence in the balance.

People grabbing and squandering wealth at a breakneck pace while most of our fellow citizens and humans live in poverty.

Online personalities spreading falsehoods to polarize people or, worse, simply to accrue pageviews and clicks.

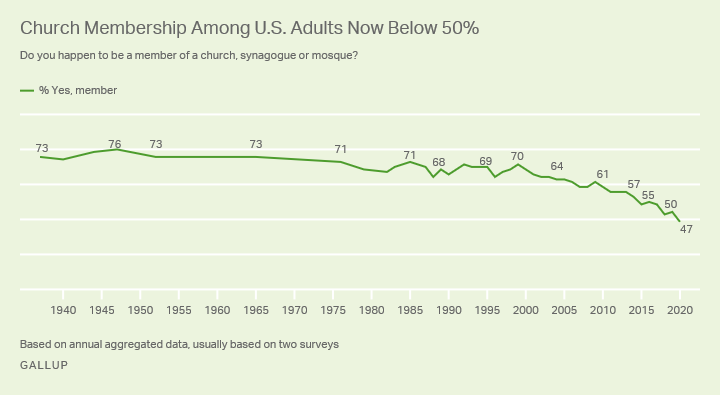

It struck me that we’re living in a world we increasingly don’t understand (or never understood), and the decisions we make as humans are increasingly against the interests of humanity. While religion is something people have turned to throughout history for some sense of morality and faith, the old doctrines also feel increasingly outdated. Regular church attendance has gone from ~49% in the 1950s to ~28% last year, and membership with any religious organization has fallen over the decades. In a modern world where every piece of information is at our fingertips, have people given up on the “one-way transom”?

Amidst all this, the advent of AI “super-intelligence” (many terms and definitions, but many researchers agree it’s coming fast) will be the first moment when something “all-knowing” (God-like) can express its opinion. As more of an Animist or Pantheist (awed by the power of nature), if not an atheist, I am surprised to find myself writing about religion in Implications. But one commonality across all religions is the depiction of God as “all-knowing,” omnipresent, etc., and I can’t help but wonder if one persistent feature (or bug?) of God is that it can neither respond nor say what it thinks of us. We cannot actually be judged or clued in to the mysteries while alive.

Fast forward to today: people can ask and discover what ChatGPT thinks of them based on all of their inquiries and interactions. Humans will be increasingly interested in what their AI agents and companions think of them as context windows for memory grow and models improve. For the first time, we’ll get a response from something “all-knowing” about us. The transom with ASI (artificial super-intelligence) will work both ways. As AI works in ways that exceed our comprehension and becomes more mysterious, will its perspective carry weight? Will its advice become a self-fulfilling prophecy? Will the next great religion-like relationship be one that is personalized to each of us? Before you scoff, consider that perhaps tens of thousands of people now view ChatGPT as a God.

The truth behind every founder is a founding team.

While I am proud to be a founder, I’ve always had issues with the official “founder” or “co-founder” titles because, in all truth, there is a “founding team” behind any successful business. In most cases, the founders of every function of a new company take an incredible amount of risk in their careers to build something from zero to one. They may not have come up with the original idea, signed the first lease, registered the domain name, or raised the initial seed capital, but they were critical ingredients and possessed amazing boldness, vision, ambition, and tolerance for risk.

In my experience starting Behance back in 2007, our founding team was the difference between this venture being a success or a failure. What is now a thriving network of creative professionals approaching 60 million members – a platform that has impacted countless careers and connected artists across genres in countless ways – started as a wayward venture with extremely inexperienced people building something we were not qualified to build.

We were lost for quite some time until a few key leaders joined us, including one particularly ambitious, low-ego engineer named Bryan Latten. Bryan came from a family that valued hard work and never took a promising career for granted, and he had a steady job at Lockheed Martin. So it must have been hard for him to make the leap and join our passionate yet clueless crew. But Bryan took the risk and jumped in, overhauling the architecture of our stack, refactoring critical parts of code, and instituting best practices in how we built and operated. He was very much a founding member of our team.

Most importantly, Bryan became a much-needed confidante and critic — always direct, passionate about quality and dispassionate about where the ideas came from. As our engineering team scaled, Bryan demonstrated the ability to manage others. If he hadn’t led us through these early years, I don’t think we would’ve found our way. Bryan epitomized the fact that “founding team” is a more accurate notion than “founder.” He founded so much of what Behance became, bringing essential skills, setting our culture, and finding our way through challenge after challenge.

Bryan joined Adobe upon our acquisition in 2012 and was quickly promoted to become one of the company’s youngest Vice Presidents. He left several years later to co-found a startup with another early Behance leader, COO Will Allen. (While I was sad to see them go, I was proud to be one of their first seed checks). Their startup was ultimately acquired by another startup. And then, just eleven months ago, Bryan called me with the startling news that he had been diagnosed with brain cancer. It was an emotional call as Bryan explained how he was preparing for the two possible paths ahead with the same directness and grounded optimism that he brought to every challenge we shared in our Behance days. Bryan gave this fight everything he could, but we all lost Bryan over Memorial Day weekend. Two young daughters lost an incredible father, his wife lost the best possible partner, his parents, sisters, family and friends lost a loyal and remarkable human being, and my former colleagues at Behance lost one of our founding members who made it all possible.

Over a dozen of us from the early days of Behance gathered at Bryan’s funeral to pay our respects. The common theme from our conversations was gratitude for Bryan’s friendship, leadership, and for saving all of our asses countless times over the course of our journey together. We all know that our journey and the success we shared, which has provided security for our families and opportunity in our careers, would not have been possible without Bryan.

The lesson? Build your founding team with precision and care and see them for who they are: founders. Perhaps “founding team” can become a more common part of the vernacular of entrepreneurship. Now, as I find myself starting A24 Labs and building an engineering, product, and design team, albeit within a larger organization, I have newfound appreciation for the founding team I have assembled. Each of us has taken a risk in our careers and is driven by ambition and vision for something that does not yet exist. Each of us believes that something new is needed in the industry of storytelling. And each of us feels in service to filmmakers and the broader teams that are required to bring world-class stories to life. Here’s to Bryan, and to all the founding teams out there taking the leap and doing the work to push the world forward.

Ideas, Missives & Mentions

Finally, here’s a set of ideas and worthwhile mentions (and stuff I want to keep out of web-scraper reach) intended for those I work with (free for founders in my portfolio, and colleagues…ping me!) and a smaller group of subscribers. We’ll cover a few things that caught my eye and have stayed on my mind as an investor, technologist, and product leader, including my latest thesis points as an angel investor, some thoughts on what humans CANNOT be trained to do (as a proxy for what will always be out of reach of AI), and a series of data provocations that got me thinking. Subscriptions go toward organizations I support, including the Museum of Modern Art. Thanks again for following along, and to those who have reached out with ideas and feedback.